How does data from the Deep Web go from results to actionable intelligence?

Welcome to the first post in a three-part series on how data goes from harvesting to actionable intelligence. The series follows data from a completely unformatted, unstructured web page through the three stages that turn it into actionable intelligence.

- Stage 1 (this post) = Harvesting

- Stage 2 = Normalizing

- Stage 3 = Reporting and Analytics

The Harvesting Tool

Let’s start with the first stage, harvesting, by learning more about the tool that performs it: the Deep Web Harvester.

BrightPlanet’s Deep Web Harvester automates the process of issuing queries (searches run through a site’s own search function) to websites and collecting the results of those queries. Automated directed querying becomes very important when harvesting on a large scale, especially when compared with traditional collection technologies such as link following, which is used by Google.

Link Following

Link following, also known as spidering, is widely used in the web data collection community but is very inefficient. It navigates to every single page it can find via hyperlinks, determines whether the content is relevant, and harvests the content only if it is. A Deep Web harvest, by contrast, performs directed queries and harvests only the relevant results.

With traditional link following, collecting content would take hours for a single site. Google reports that the New York Times site has over 12 million unique web pages within that single domain, and a link follower would have to visit each of them to decide whether it is relevant.
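The difference between the two approaches can be sketched in a few lines of Python. This is only a toy illustration, not how the Deep Web Harvester is implemented: the `SITE` dictionary, the `site_search` helper, and the page paths are all hypothetical stand-ins for a real website and its search function, and the directed query is assumed to return a single results page.

```python
from collections import deque

# Hypothetical toy site: path -> (page text, outgoing links)
SITE = {
    "/": ("Home page", ["/business", "/sports"]),
    "/business": ("Business section", ["/business/acme-earnings", "/business/markets"]),
    "/sports": ("Sports section", ["/sports/final-score"]),
    "/business/acme-earnings": ("Acme reports record earnings", []),
    "/business/markets": ("Markets close higher", []),
    "/sports/final-score": ("Home team wins the final", []),
}

def link_follow(start, term):
    """Breadth-first crawl: fetch every reachable page, keep the relevant ones."""
    seen, queue, hits = {start}, deque([start]), []
    fetched = 0
    while queue:
        url = queue.popleft()
        text, links = SITE[url]
        fetched += 1  # every page is downloaded before relevance is known
        if term.lower() in text.lower():
            hits.append(url)
        for link in links:
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return hits, fetched

def site_search(term):
    """Stand-in for the site's own search function (an assumption for this sketch)."""
    return [url for url, (text, _) in SITE.items() if term.lower() in text.lower()]

def directed_query(term):
    """Ask the site's search for the term, then fetch only the results."""
    results = site_search(term)
    fetched = 1 + len(results)  # one results page plus one fetch per result
    return results, fetched

crawl_hits, crawl_fetches = link_follow("/", "acme")
query_hits, query_fetches = directed_query("acme")
print(crawl_hits, crawl_fetches)
print(query_hits, query_fetches)
```

Both approaches find the same article here, but the crawl fetches every page on the toy site while the directed query fetches only the results page and the one matching article. On a site with millions of pages, that gap is what makes link following so slow.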

Manual Deep Web Harvesting

To simulate what the Deep Web Harvester does, try the following. Go to a popular newspaper site such as the New York Times. Enter a search term and wait for the results. View all the results on every single results page and copy and paste all the content into a document. Depending on your search term, it could take days. BrightPlanet’s Deep Web Harvester can do it automatically.

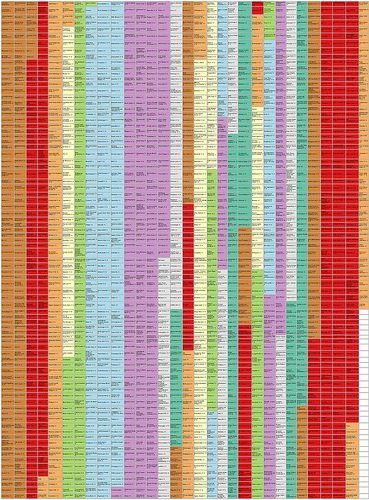

Fortune 200 Companies – 50 Newspapers – 5 Hours

Here is an example of a large-scale data harvest by the Deep Web Harvester.

BrightPlanet collected news stories published about Fortune 200 companies. We submitted the name of each Fortune 200 company as a Deep Web query to the search function of each of the top 50 newspapers and collected the content. All of the text-based content from every result on every results page was harvested. This is a large-scale version of you copying and pasting all the text from each of your results into a document.

The Harvest Specifications

Because of BrightPlanet’s pre-existing Source Repository, we did not have to perform any custom configuration of the sources (the newspapers’ website searches in this case). The Deep Web Harvester applied each of the 200 queries (Fortune 200 company names) individually to each of the 50 newspaper sites and harvested the results of each search separately. In total, BrightPlanet’s Deep Web Harvester issued 10,000 individual queries (200 companies x 50 newspapers).
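To make the arithmetic concrete, here is a minimal sketch of how a query list like this could be built as a cross product. The company and newspaper names are placeholders invented for the example, not the actual sources or queries used in the harvest.

```python
from itertools import product

# Placeholder names; the real harvest used Fortune 200 company names
# and the search functions of 50 newspaper sites.
companies = [f"company-{i}" for i in range(1, 201)]
newspapers = [f"newspaper-{i}" for i in range(1, 51)]

# One (query, source) pair for every company on every newspaper site.
jobs = list(product(companies, newspapers))

print(len(jobs))   # 10000
print(jobs[0])     # ('company-1', 'newspaper-1')
```

Each pair represents one directed query: a single company name submitted to a single newspaper's search.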

The Results

BrightPlanet harvested a total of 125,600 news articles mentioning Fortune 200 companies from the 50 newspaper sources in less than 5 hours.
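For a rough sense of scale, the figures above can be turned into averages. This is back-of-the-envelope arithmetic only; because the harvest finished in less than 5 hours, the hourly rates below are lower bounds, and the variable names are just for illustration.

```python
articles = 125_600   # news articles harvested
queries = 10_000     # 200 companies x 50 newspapers
max_hours = 5        # the harvest took less than this

articles_per_query = articles / queries
min_queries_per_hour = queries / max_hours
min_articles_per_hour = articles / max_hours

print(articles_per_query)      # 12.56
print(min_queries_per_hour)    # 2000.0
print(min_articles_per_hour)   # 25120.0
```

On average, each query returned about 12.5 articles, and the harvester worked through at least 2,000 queries per hour.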

Next Stop: Normalizing

Now that the data is harvested, what happens next? Next week, learn how we take harvested data and normalize it (organize it) into a semi-structured format.
