The Data Enrichment Process Explained

Because we are in the business of data collection, most people’s interest in our technology has focused on how we harvest content. The truth is that most of the value of harvesting both Surface Web and Deep Web data comes from what is done with the content after it is harvested, which is why we wanted to give you an overview of the data enrichment process.

Step 1: Normalization

As you likely know, data on the Web today exists in many different file formats. Standard webpages are written in HTML, Word documents are uploaded, PowerPoint files are shared, PDFs are posted, and so on. Hundreds of file formats like these make collecting and analyzing Web data an interesting challenge.

The first step in BrightPlanet’s enrichment process is extracting all the important text from these file formats and getting the text into one unstructured, standard data type. This step in the enrichment process is called normalization.

Normalization may sound simple, but it comes with its own unique set of challenges. Most of them involve determining exactly which text the end user wants extracted from a given webpage.

Take a news article, for example. Simply extracting and storing all of the text on a standard news webpage for analysis will present problems, because the end user isn’t interested in everything on the page. Text in the navigation, including links and the titles of the most recent articles, will create noise later on when analyzing the harvested text.

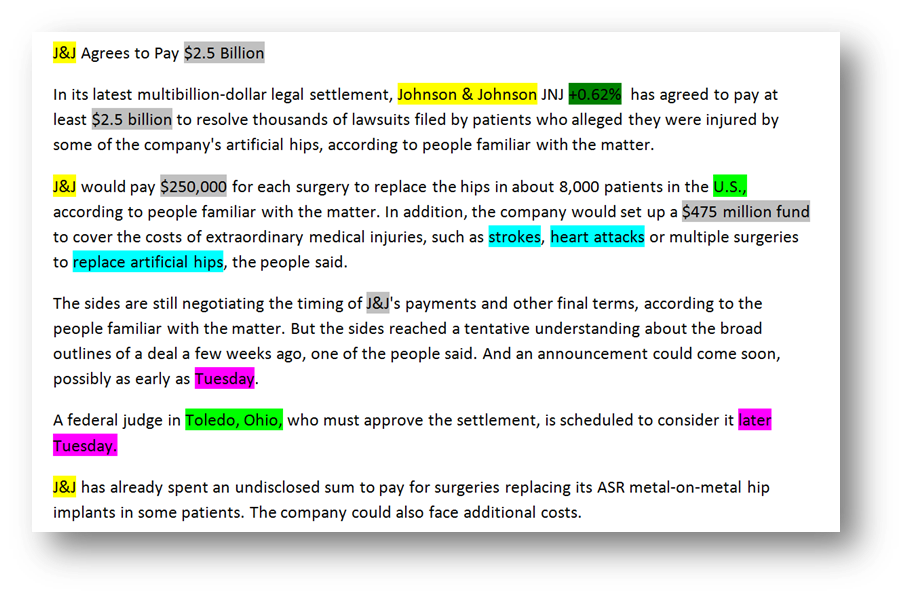

To fix this problem, our harvester extracts only the body of the document and drops the items on the page that are unrelated to the news story being harvested. The image above shows a news article to be harvested alongside the resulting unstructured text, ready for entity extraction.
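The body-only extraction described above can be approximated with a simple tag-based filter. The sketch below uses Python’s standard-library `html.parser` and assumes, purely for illustration, that navigation and other page chrome sit inside `<nav>`, `<header>`, `<footer>`, and `<aside>` elements. Real pages rarely follow that rule so neatly, and this is not how BrightPlanet’s harvester is implemented; the names `SKIP_TAGS`, `BodyTextExtractor`, and `normalize_html` are hypothetical.

```python
from html.parser import HTMLParser

# Hypothetical list of tags treated as page chrome rather than article content.
SKIP_TAGS = {"nav", "header", "footer", "aside", "script", "style"}


class BodyTextExtractor(HTMLParser):
    """Collects page text while ignoring anything nested inside SKIP_TAGS."""

    def __init__(self):
        super().__init__()
        self.skip_depth = 0  # greater than 0 while inside a skipped element
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS and self.skip_depth > 0:
            self.skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self.skip_depth == 0:
            self.chunks.append(text)


def normalize_html(html: str) -> str:
    """Return the non-navigation text of an HTML page as one block of text."""
    parser = BodyTextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)
```

Fed a page whose `<nav>` holds "Home" links and whose `<article>` holds the story, `normalize_html` returns only the story text as a single unstructured string, which is the shape of output the next step expects.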

Once normalization is complete, all the data exists in a mostly unstructured state, meaning it is simply a block of text. A third-party analysis, visualization, or modeling tool would have a very difficult time working with data in this state, so some further tuning and structuring needs to happen before the data set can be analyzed. To make the data more effective and usable, we move on to the next step of the enrichment process: entity extraction.

Step 2: Entity Extraction

To give the data enough structure to be used within an analytic tool, we turn to a process called entity extraction. Put simply, entity extraction is the process of identifying the valuable key terms within the data. To assist with this step, BrightPlanet has partnered with IMT Holdings and its Rosoka technology, which is built directly into our harvest workflow.

The specific entities or terms that are extracted vary widely based on customer requirements, but common examples include people, companies, and places.
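To make the input and output of this step concrete, here is a minimal dictionary-lookup (gazetteer) tagger. It is a deliberately simple stand-in, not Rosoka’s method, which relies on much richer linguistic analysis. The entity types and names in the hypothetical `GAZETTEER` play the role of customer requirements and are drawn from the BBC excerpt quoted later in this article.

```python
import re

# Hypothetical customer-specific gazetteer: entity type -> known surface forms.
GAZETTEER = {
    "person": ["Imogen Foulkes"],
    "organization": ["World Health Organization", "BBC"],
    "place": ["Syria", "Nigeria", "Pakistan", "Afghanistan", "Geneva"],
}


def extract_entities(text: str, gazetteer: dict[str, list[str]]) -> dict[str, list[str]]:
    """Return, per entity type, the known names found in the text as whole words."""
    found = {}
    for entity_type, names in gazetteer.items():
        hits = [
            name
            for name in names
            if re.search(r"\b" + re.escape(name) + r"\b", text)
        ]
        if hits:
            found[entity_type] = hits
    return found
```

Changing the customer’s requirements here means changing the gazetteer, not the code, which mirrors the point that the extracted entity types vary from project to project.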

If a person were to try entity extraction manually, they would have to go through the data sentence by sentence, highlighting the terms that are valuable to them. Manual entity extraction would be impossible at the size and scope at which BrightPlanet harvests documents. Fortunately, our partner’s integrated entity extraction technology is highly scalable; on average, we have seen sub-second processing times for most results. For the more technical readers out there, the entity extraction stage can also be parallelized, allowing for a highly scalable enrichment process.
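Entity extraction parallelizes well because each document can be processed independently of every other. The sketch below fans documents out to a worker pool with Python’s `concurrent.futures`; `extract_places` is a hypothetical toy extractor standing in for a real engine. It uses `ThreadPoolExecutor` to keep the example simple. For CPU-bound extraction in CPython, `ProcessPoolExecutor` offers the same `map` interface and sidesteps the interpreter lock.

```python
import re
from concurrent.futures import ThreadPoolExecutor

# Hypothetical place list; stands in for a real extraction engine's models.
PLACES = ["Syria", "Nigeria", "Pakistan", "Afghanistan", "Geneva"]


def extract_places(document: str) -> list[str]:
    """Toy per-document extractor: its result depends only on its own input."""
    return [p for p in PLACES if re.search(r"\b" + re.escape(p) + r"\b", document)]


def extract_all(documents: list[str], workers: int = 4) -> list[list[str]]:
    """Run the extractor over many documents concurrently; results keep input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(extract_places, documents))
```

Because no document’s result depends on another’s, adding workers (or machines) increases throughput without changing the output.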

Taking the Data a Step Further

An important thing to note is that our partner’s entity extraction does not only tag co-mentions of entities; it also tags relationships that may exist between them. Take a look at the following excerpt from a BBC article about a disease outbreak in Syria.

“The World Health Organization (WHO) has confirmed 10 cases of polio in war-torn Syria – the first outbreak in the country in 14 years. The UN body says a further 12 cases are still being investigated. Most of the 22 people who have been tested are babies and toddlers. Before Syria’s civil war began in 2011, some 95% of children were vaccinated against the disease. The UN now estimates 500,000 children have not been immunised. Polio has been largely eradicated in developed countries but remains endemic in Nigeria, Pakistan and Afghanistan. The BBC’s Imogen Foulkes in the Swiss city of Geneva says there has been speculation that foreign groups fighting in Syria may have imported it.”

Let’s say that, in this example, we are interested in tracking disease outbreaks around the world. The two most valuable entity types would then be diseases and places. With traditional tagging techniques, we would extract:

- Places: Syria, Nigeria, Pakistan, Afghanistan, Geneva

- Diseases: Polio

With co-mention extraction alone, an analytic engine may draw false conclusions. It may decide that Syria, Nigeria, Pakistan, Afghanistan, and Geneva have all had recent polio outbreaks, which is inaccurate: this article documents cases only in Syria.
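The problem is easy to reproduce. If an analytic step treats document-level co-mention as a relationship, it pairs every extracted disease with every extracted place, burying the one true pairing among false ones. The function name `co_mention_pairs` is hypothetical.

```python
from itertools import product

# Entities tagged in the BBC excerpt by co-mention only (the lists above).
PLACES = ["Syria", "Nigeria", "Pakistan", "Afghanistan", "Geneva"]
DISEASES = ["Polio"]


def co_mention_pairs(diseases: list[str], places: list[str]) -> list[tuple[str, str]]:
    """Naively treat every disease/place pair found in one document as related."""
    return list(product(diseases, places))
```

Of the five pairs returned, only `("Polio", "Syria")` is supported by the article; the other four are exactly the false assumptions described above.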

BrightPlanet’s extraction engine can help you take the data a step further by finding relationships between entities. With relationship tagging, we tag both entities and then identify whether they are related by examining whether a predicate connects them. In the context of entity extraction, a predicate is an expression that assigns a property to an entity; often, that property is a relationship to another entity. Because a predicate relates polio to Syria in the excerpt, a disease outbreak entity is tagged as polio/Syria. See below for the visual.

This relationship data lets us be much more precise about the entities we tag and the data we hope to model. Because the tagged entities are customizable, extraction, tagging, and analysis can be applied to problems in a wide range of industries.
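As a rough illustration of why relationship tagging avoids the false pairs, here is a crude approximation: it relates a disease to a place only when both appear in the same sentence together with an outbreak predicate phrase. The entity lists, the `OUTBREAK_PREDICATES` phrases, and the function names are hypothetical, and matching a fixed phrase list is a swapped-in shortcut for the grammatical analysis a production engine performs.

```python
import re

# Hypothetical entity lists and outbreak predicate phrases for this example only.
DISEASES = ["polio"]
PLACES = ["Syria", "Nigeria", "Pakistan", "Afghanistan", "Geneva"]
OUTBREAK_PREDICATES = ["cases of", "outbreak of", "outbreak in"]


def mentions(term: str, sentence: str) -> bool:
    """Case-insensitive whole-word match of term within sentence."""
    return re.search(r"\b" + re.escape(term) + r"\b", sentence, re.IGNORECASE) is not None


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def outbreak_relations(text: str) -> set[str]:
    """Tag disease/place pairs only in sentences that contain an outbreak predicate."""
    relations = set()
    for sentence in split_sentences(text):
        if not any(mentions(p, sentence) for p in OUTBREAK_PREDICATES):
            continue  # no outbreak predicate, so co-mentions here are not related
        for disease in DISEASES:
            if not mentions(disease, sentence):
                continue
            for place in PLACES:
                if mentions(place, sentence):
                    relations.add(f"{disease}/{place}")
    return relations
```

Run over the excerpt, the "endemic in Nigeria, Pakistan and Afghanistan" sentence contains no outbreak predicate and the Geneva sentence never names polio, so only `polio/Syria` survives. A heuristic this simple would still miss relationships expressed through pronouns such as "imported it".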

Semi-Structured Data

Now that the enrichment process is complete, the data has been transformed from an unstructured format into a semi-structured data set. Below is an example of the content of a target webpage in a semi-structured format. Extracted entities and semi-structured data sets give analytic tools far more opportunity to make sense of harvested data.
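One way to picture a semi-structured record is free text carried alongside structured fields produced by enrichment. The sketch below is a hypothetical schema, not BrightPlanet’s actual output format: the field names `url`, `body_text`, `entities`, and `relations` are assumptions chosen for illustration, serialized to JSON so any downstream tool can read them.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class EnrichedDocument:
    """Hypothetical semi-structured record: unstructured text plus structured fields."""

    url: str
    body_text: str  # output of normalization (still unstructured)
    entities: dict[str, list[str]] = field(default_factory=dict)  # entity type -> names
    relations: list[str] = field(default_factory=list)  # e.g. "polio/Syria"

    def to_json(self) -> str:
        """Serialize the record, including nested fields, as indented JSON."""
        return json.dumps(asdict(self), indent=2)
```

The text stays free-form while the entity and relation fields can be filtered, counted, and joined like columns in a table.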

Consider, for example, a harvested data set of every news story published by the top 50 newspapers in the United States over the last month. With semi-structured data sets (data sets that have been normalized and entity extracted), answering the question “Which person was mentioned most in the news last month?” becomes fairly straightforward.

Working with harvested and enriched semi-structured data sets from BrightPlanet makes tough questions like these, ones that once seemed far too time-consuming and complicated to answer manually, much more manageable.

What Next?

Want to follow the data from harvest to analytics? Download our Transforming Unstructured Data whitepaper.

Have a project that could be made more efficient by harvesting and enrichment? Sign up for a free demo with one of our data acquisition engineers.
